Join us

The Tech&People group is always on the lookout for enthusiastic candidates with multidisciplinary backgrounds. In our work, we embed ourselves in the contexts we are designing for (formative studies), we design and build web, mobile, wearable, robotic, gaming, and tangible interactive systems, and we evaluate them with target users. If you join our group, you will likely work closely with end-users, collaborate with a multidisciplinary team (engineers, designers, data scientists, psychologists, clinicians), build novel and impactful interactive systems, eventually publish and present your work internationally, and see your project being used in real-life contexts.

Below, you can find the master's thesis proposals that the group is offering for the year 2023/2024. We also present a set of stories about what members of the group did in their master's or are doing now, to provide some background to the proposals and an overall view of the opportunities you can find at Tech&People. Lastly, you can find a set of Frequently Asked Questions. If you want to know more, get in touch by e-mail, follow us on Twitter, or check our publications.

MSc Proposals 2023/2024

Motivation

Assistive technology plays a crucial role in empowering individuals with disabilities to overcome barriers and to participate independently in many aspects of life. However, finding comprehensive and up-to-date information about assistive technology can be challenging for users, developers, and evaluators alike. There is therefore a pressing need for a centralised knowledge repository that provides reliable, accessible, and comprehensive information about assistive technology devices, software, and accessibility features. The repository will cater to different stakeholders: individuals with disabilities seeking information about the available assistive technology options, developers looking for best practices and guidelines for designing inclusive solutions, and evaluators seeking resources for assessing the effectiveness and usability of assistive technology. It will include diverse content such as user guides, tutorials, technical specifications, case studies, accessibility standards, and community-driven content. By building this repository, we aim to close the information gap, empower individuals with disabilities, facilitate inclusive design practices, and promote the effective evaluation of assistive technology solutions, ultimately improving accessibility and the lives of individuals with disabilities.

What you will do

During the thesis, you will focus on building a knowledge repository about assistive technology for users, developers, and evaluators. You will curate and consolidate comprehensive information about assistive technology devices, software, and accessibility features. You will conduct extensive research, gather relevant resources, and organise the repository’s content. You will design and develop an accessible and user-friendly platform for accessing the repository. You will also engage with the assistive technology community, gather feedback, and iterate on the repository based on user needs. The final outcome will be a functional knowledge repository that serves as a valuable resource for individuals with disabilities, developers, and evaluators in the field of assistive technology.

Team

Motivation

In today’s digital era, the web is a vital platform for information dissemination, communication, and engagement. However, individuals with disabilities often face barriers in accessing web content because of inaccessible design and a lack of awareness of accessibility guidelines among content authors. To promote inclusivity and ensure equal access to information, there is a pressing need for AI co-pilots that assist authors in creating accessible web content. Such a co-pilot acts as a virtual assistant, providing real-time guidance, suggestions, and automated checks for compliance with accessibility standards and best practices. By integrating natural language processing and machine learning techniques, it can analyse content as it is being authored and provide contextual recommendations, such as suggesting alternative text for images, verifying that the heading structure is appropriate, and identifying potential colour contrast issues. This proposal seeks to develop such a co-pilot, empowering authors, regardless of their familiarity with accessibility guidelines, to produce content that individuals with disabilities can access and consume. User studies involving content authors and individuals with disabilities will evaluate the effectiveness, usability, and impact of the co-pilot. The findings will contribute to advancing inclusive web design practices, fostering equal access to information, and encouraging content authors to proactively consider accessibility in their work.
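
Of the automated checks mentioned above, colour contrast is the one that follows a published formula. The sketch below is a minimal illustration, assuming colours arrive as #RRGGBB hex strings and using the WCAG 2.1 relative-luminance and contrast-ratio definitions with the AA minimums (4.5:1 for normal text, 3:1 for large text). The function names are hypothetical and not part of any existing co-pilot.

```python
from typing import Optional


def channel_to_linear(value: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light (WCAG 2.1 formula)."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance of a colour written as '#RRGGBB'."""
    digits = hex_colour.lstrip("#")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * channel_to_linear(r)
            + 0.7152 * channel_to_linear(g)
            + 0.0722 * channel_to_linear(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter colour."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def check_contrast(foreground: str, background: str,
                   large_text: bool = False) -> Optional[str]:
    """Return a warning if the pair fails WCAG AA, or None if it passes."""
    minimum = 3.0 if large_text else 4.5
    ratio = contrast_ratio(foreground, background)
    if ratio < minimum:
        return f"Contrast {ratio:.2f}:1 is below the AA minimum of {minimum}:1"
    return None


print(check_contrast("#777777", "#FFFFFF"))  # a warning: fails AA for normal text
print(check_contrast("#767676", "#FFFFFF"))  # None: passes AA
```

In a co-pilot, a warning like this would be one input to the contextual recommendation, for example suggesting a slightly darker shade that clears the threshold.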

What you will do

During the thesis, you will develop an AI co-pilot for authoring accessible web content. You will design and implement algorithms and models that leverage natural language processing and machine learning techniques to analyse web content in real time as it is being authored. You will develop intelligent guidance and automated checks to ensure compliance with accessibility standards and best practices, and you will conduct user studies with content authors and individuals with disabilities to evaluate the effectiveness and usability of the co-pilot. You will iteratively refine the co-pilot based on the feedback and insights gained from these studies. The findings will be documented in a master’s thesis that describes the development process, the evaluation results, and the potential impact of the AI co-pilot for authoring accessible web content.

Team

Motivation

In a university setting, the accessibility of learning materials is crucial to ensure that all students, including those with disabilities, have equal access to education. However, creating accessible learning materials can be time-consuming and resource-intensive for educators and support staff. There is a pressing need to automate and streamline their production, leveraging technology to improve efficiency, consistency, and inclusivity. Automated processes can generate alternative formats, such as accessible PDFs, audio transcripts, and tactile graphics, from the original content, reducing the manual effort required from instructors. Automation can also help ensure adherence to accessibility standards and guidelines, such as WCAG 2.1, by incorporating accessibility checks and remediation techniques into the production workflow. This frees up instructors' time, allowing them to focus on designing engaging and interactive learning experiences while automated tools handle the accessibility aspects, and it allows universities to demonstrate their commitment to inclusivity through the prompt and consistent delivery of accessible materials to all students. By exploring the automation of accessible learning materials in a university setting, we aim to improve the accessibility of educational resources, reduce the burden on instructors, and enhance the educational experience of students with disabilities, contributing to efficient and scalable solutions that promote inclusivity, equality, and academic success for all learners.
This work will be conducted in collaboration with GAPsi - Gabinete de Apoio Psicológico da FCUL (the psychological support office of FCUL).

What you will do

During the thesis, you will focus on automating the production of accessible learning materials in a university setting. You will conduct a comprehensive analysis of existing manual processes for creating accessible materials, identifying bottlenecks and challenges. Based on the analysis, you will design and develop automated workflows and tools that generate accessible formats, such as accessible PDFs or audio transcripts. You will integrate accessibility checks and remediation techniques into the automation process, ensuring compliance with accessibility standards. You will conduct user testing and gather feedback to refine the automation system and evaluate its effectiveness. The final outcome will be an automated solution that streamlines the production of accessible learning materials, benefiting both students with disabilities and educators.

Team

Motivation

Online surveys have become a ubiquitous tool for gathering data and insights across many domains, from research studies to customer feedback. However, the accessibility of survey tools remains a significant concern, as individuals with disabilities may encounter barriers that prevent them from participating fully. There is a critical need for an accessible online survey tool that ensures inclusivity and equal access for all respondents. By incorporating accessibility features, such as support for screen readers, keyboard navigation, alternative text for images, and adequate colour contrast, such a tool can accommodate individuals with visual impairments, motor disabilities, cognitive limitations, and other accessibility needs. It should also adhere to accessibility guidelines and standards, such as WCAG 2.1, to ensure a high level of usability and compatibility with assistive technologies. An accessible survey tool benefits not only respondents with disabilities but also survey creators, researchers, and organisations seeking reliable and representative data: it enables researchers to gather diverse perspectives from a broader population, ensuring that no one is excluded because of accessibility barriers, and it allows organisations to demonstrate their commitment to inclusivity by collecting feedback from all their stakeholders. By building an accessible online survey tool, we aim to bridge the accessibility gap in data collection, empower individuals with disabilities to participate fully in surveys, and improve the quality and reliability of survey data.

What you will do

During the thesis, you will focus on building an accessible online survey tool. You will conduct a thorough analysis of existing survey tools, identifying accessibility barriers and limitations. Based on the findings, you will design and develop an online survey tool that incorporates accessibility features, such as support for screen readers, keyboard navigation, alternative text for images, and color contrast compliance. User testing will be conducted to evaluate the tool’s usability and effectiveness for individuals with disabilities. You will iterate and refine the tool based on user feedback, ensuring its compatibility with assistive technologies and adherence to accessibility guidelines. The final outcome will be a functional and accessible online survey tool that promotes inclusivity in data collection practices.

Team

Motivation

In recent years, conversational AI agents have become increasingly prevalent in our daily lives, providing virtual assistance, information retrieval, and even emotional support. These agents use natural language processing and machine learning techniques to understand and respond to user queries and commands. While they have the potential to greatly enhance human-computer interaction, there is a pressing need to investigate and characterise their accessibility to ensure that all individuals, regardless of their abilities, can use them. Accessibility aims to eliminate barriers and provide equal opportunities for people with disabilities, yet the accessibility of conversational AI agents has received limited attention in research and development. Understanding the accessibility challenges and limitations of these agents is crucial to ensure their effective and equitable use by individuals with disabilities, such as those with visual impairments, hearing impairments, motor disabilities, or cognitive limitations. This proposal seeks to identify the key factors that impact the usability and inclusiveness of these systems for individuals with disabilities. It will investigate how conversational AI agents handle various accessibility requirements, including providing alternative output modalities, accommodating diverse input methods, supporting assistive technologies, and addressing potential biases or stereotypes. It will also explore the experiences and perspectives of individuals with disabilities when interacting with conversational AI agents, offering insights into their needs, preferences, and potential areas of improvement.
Ultimately, this research endeavour will contribute to the advancement of inclusive technology design and policy-making, ensuring that conversational AI agents are accessible, usable, and empowering for individuals of all abilities.

What you will do

During the thesis, you will conduct a comprehensive investigation to characterise the accessibility of conversational AI agents. You will analyse existing conversational AI systems and evaluate their performance in meeting accessibility requirements. This evaluation will involve designing and conducting user studies with participants representing diverse disability groups. You will collect data on the usability, effectiveness, and satisfaction of interacting with conversational AI agents. You will also explore the challenges faced by individuals with disabilities in using these agents and gather insights on potential improvements. The findings will be used to develop guidelines and recommendations for enhancing the accessibility and inclusiveness of conversational AI agents.

Team

Motivation

As explained by ChatGPT itself: “As an AI language model, I can play a significant role in improving web accessibility by addressing the needs of individuals with reading or cognitive disabilities and assisting those with visual impairments. Here’s how: Simplifying Text: I can simplify complex text, making it easier to comprehend for people with reading difficulties or cognitive disabilities. Summarization: For individuals who have difficulty processing lengthy articles or documents, I can generate concise summaries that capture the key points and main ideas. Relevant Topic Identification: When it comes to individuals with visual impairments, I can assist by identifying the most relevant topics or key information within a webpage. By providing concise summaries or extracting key details, I can facilitate quicker navigation through web content and enable users to locate the information they need more efficiently. Alternative Formats: In addition to text-based assistance, I can also generate alternative formats such as audio descriptions or transcripts for visual content like images, videos, or charts.”

What you will do

In this topic, you will explore how Large Language Models can be used to improve web accessibility, for instance by simplifying text, summarizing content, identifying key topics, or providing alternative formats. Besides exploring different approaches (and prompts), you will study how users can interact effectively with such an interface, which is key to integrating the AI-based responses into the webpage exploration experience.

Team

João Guerreiro

Joana Campos

Carlos Duarte

Letícia Seixas Pereira

Motivation

Entertainment as a whole has come to be recognized in modern society as a fundamental part of our lives and well-being. Gaming has a long list of potential benefits, including coping with anxiety, social bonding, and serving as a creative outlet. While there is a vast array of games that people can play together, they generally require all players to enjoy the same type of game.

What you will do

In our group, we have been exploring highly asymmetric games for shared play. In this topic, you will be challenged to find ways to create a sense of shared play across a wide variety of casual games. You will have the opportunity to delve deeper into the design of asymmetric games and game ecosystems, exploring ways to expand the concept of shared play. The work will include building a proof-of-concept ecosystem for shared play and evaluating it with users.

Team

Motivation

People with vision impairments are able to navigate independently by using their Orientation and Mobility skills and their travel aids (white cane or guide dog). However, navigating independently in unfamiliar and/or complex locations remains a major challenge, and blind people are therefore often assisted by sighted people in such scenarios. While GPS-based navigation technologies (e.g., Google Maps) can help, their accuracy (errors of around 5 meters) is still too low to fully support blind users navigating unknown locations. Moreover, GPS does not work reliably indoors, and indoor locations generally do not have a navigation system installed.

What you will do

Our goal is to use data from the crowd (other people who have walked the same areas before) to learn more about the environment and provide additional instructions to blind users. One possible approach is to use smartphone sensors to estimate possible paths after an individual enters a building. While GPS may tell us that a user is entering or is inside a building, large amounts of crowd-sourced smartphone sensor data may reveal possible paths and obstacles about which to instruct blind users. For instance, one may learn that after the entrance there is a path going left with stairs up one floor after approximately 10 meters, and another path going forward (and so on). While the data from a single user may be erroneous, we plan to use data from many users to make such an approach more robust.
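
The robustness argument (many users outweigh one erroneous trace) can be illustrated with a toy aggregation step. This is a hypothetical sketch, not the group's method: it assumes each user's sensor trace has already been reduced to a (direction, distance, landmark) observation, for example through step counting and barometer readings, and it uses the median so that a few bad traces do not dominate. All names and values are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class Observation:
    """One user's (hypothetical) summary of a walk after entering a building."""
    direction: str     # e.g. "left" or "forward", assumed to come from compass/gyroscope
    distance_m: float  # distance walked before the landmark, assumed from step counting
    landmark: str      # e.g. "stairs going up", assumed from barometer changes


def aggregate(observations, min_support=3):
    """Merge noisy per-user observations into crowd-based path estimates.

    Observations are grouped by (direction, landmark). Groups reported by fewer
    than `min_support` users are dropped, and the median distance is used so
    that a few erroneous traces do not skew the estimate.
    """
    groups = defaultdict(list)
    for obs in observations:
        groups[(obs.direction, obs.landmark)].append(obs.distance_m)
    return {
        key: median(distances)
        for key, distances in groups.items()
        if len(distances) >= min_support
    }


def to_instruction(direction, landmark, distance_m):
    """Turn one aggregated path into a spoken-style instruction."""
    return f"Go {direction}: {landmark} after about {round(distance_m)} meters."


crowd = [
    Observation("left", 9.5, "stairs going up"),
    Observation("left", 10.0, "stairs going up"),
    Observation("left", 10.2, "stairs going up"),
    Observation("left", 31.0, "stairs going up"),  # erroneous trace
    Observation("forward", 20.0, "a door"),        # seen by one user only
]
for (direction, landmark), distance in aggregate(crowd).items():
    print(to_instruction(direction, landmark, distance))
```

The median and the minimum-support threshold are simple stand-ins for whatever robust estimation the project would actually adopt.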

Team

Motivation

Mobile applications have become an integral part of our daily lives, providing convenience, information, and entertainment. However, individuals with disabilities often face accessibility challenges when navigating these applications, which limits their ability to fully engage with and benefit from them. To ensure inclusivity and equal access, there is a critical need to improve the accessibility of the navigation mechanisms of mobile applications. These mechanisms are the backbone through which users reach content and functionality, yet many navigation designs present barriers for individuals with disabilities, such as those with visual impairments, motor disabilities, or cognitive limitations. Common issues include complex gesture-based interactions, insufficient visual cues, and non-intuitive menu structures. This proposal will analyse existing mobile applications and their navigation designs, identify common accessibility issues and usability barriers, and assess the ability of the WCAG to characterise these barriers. Based on the findings, it will design and implement innovative approaches to improve navigation accessibility, which may include alternative navigation methods, integration with assistive technologies, enhanced visual and auditory feedback, and optimised menu structures. User studies involving individuals with disabilities will evaluate the effectiveness, usability, and satisfaction of the proposed navigation enhancements, and the insights gained will inform iterative refinements and validate the impact of the improvements.
By improving the accessibility of mobile application navigation mechanisms, this research will contribute to a more inclusive digital landscape, ensuring that individuals with disabilities can navigate and interact with mobile applications with ease, independence, and equal access to information and services.

What you will do

During the thesis, you will focus on improving the accessibility of mobile application navigation mechanisms. You will analyse existing mobile applications and identify common accessibility issues related to navigation. Based on the findings, you will design and implement innovative approaches to enhance navigation accessibility, such as alternative navigation methods, assistive technology integration, and improved visual and auditory feedback. User studies involving individuals with disabilities will be conducted to evaluate the effectiveness and usability of the proposed navigation enhancements. You will iteratively refine the navigation mechanisms based on user feedback, leading to the development of accessible navigation guidelines and recommendations.

Team

Motivation

Hybrid spaces have been emerging as a way to bridge the gap between physical and virtual interactions, offering a flexible, and potentially inclusive, approach to socializing. For instance, a pair with mixed visual abilities (one blind and one sighted person) could visit a museum together, with one exploring it virtually while the other is there in the real world. While such hybrid environments increasingly incorporate virtual and augmented reality technologies to enhance the user experience, they often exclude blind participants, who are unable to engage with the visual stimuli these spaces rely on.

What you will do

In this topic, you will explore how to support inclusive mixed-reality experiences for people with mixed visual abilities, showcasing them in a proof-of-concept scenario (e.g., a museum, an escape room, or another option). The work will include developing environments in Unity, working with VR headsets and/or AR, and involving blind people in user studies.

Team

Motivation

In recent years, large language models, such as GPT-3.5, have demonstrated remarkable capabilities in natural language processing and generation, and they have the potential to address various challenges, including those related to accessibility. Accessibility barriers on the web pose significant obstacles for individuals with disabilities, affecting their ability to access and navigate online content, and manual remediation of these barriers can be time-consuming and resource-intensive. This proposal aims to investigate the abilities of large language models to address web accessibility barriers and to develop a browser extension as a practical solution. Leveraging the language understanding and generation capabilities of these models, the extension will aim to automatically identify and fix accessibility issues on web pages in real time, for example by providing alternative text for images, improving semantic structure, enhancing keyboard navigation, and addressing other accessibility guidelines. By reducing the reliance on manual remediation, such an extension could save time and resources for website owners and developers, making accessibility more feasible and scalable, and it could give individuals with disabilities a more inclusive online experience.

What you will do

During the thesis, you will investigate the abilities of large language models, such as GPT-3.5, to address web accessibility barriers. You will analyse common accessibility issues on the web and explore how these models can be used to automatically identify and fix them. You will then develop a browser extension that leverages the language model’s capabilities to provide real-time accessibility enhancements to web pages. You will evaluate the effectiveness and accuracy of the extension through user testing and gather feedback for further improvements. You will document your findings and development process in your master’s thesis, contributing to the fields of web accessibility and large language model applications.
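
Before any language model is involved, some barriers can be located with simple rules, and the model is then asked to fix only what was found. The sketch below illustrates that identification step under clear assumptions: it uses Python's standard `html.parser` as a stand-in for the live DOM a real browser extension would inspect in JavaScript, it flags only images without an `alt` attribute and skipped heading levels, and the prompt-building helper is hypothetical (no particular LLM API is assumed).

```python
from html.parser import HTMLParser


class AccessibilityScanner(HTMLParser):
    """Collects a few rule-based accessibility issues from an HTML string."""

    def __init__(self):
        super().__init__()
        self.issues = []
        self._previous_heading = 0  # level of the last heading seen (0 = none yet)

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # alt="" is valid for decorative images, so only a missing attribute is flagged
        if tag == "img" and "alt" not in attributes:
            self.issues.append(("missing_alt", attributes.get("src") or ""))
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            # A jump such as h1 -> h3 breaks the outline that screen reader users navigate
            if self._previous_heading and level > self._previous_heading + 1:
                self.issues.append(
                    ("skipped_heading", f"h{self._previous_heading} -> h{level}"))
            self._previous_heading = level


def scan(html):
    """Return the list of (issue_kind, detail) pairs found in `html`."""
    scanner = AccessibilityScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.issues


def build_alt_text_prompt(src, page_context):
    """Hypothetical prompt asking an LLM for a draft alt text, to be reviewed."""
    return (f"Write a short alternative text for the image '{src}'. "
            f"Surrounding page text: {page_context}")


page = '<h1>Report</h1><h3>Results</h3><img src="chart.png"><img src="line.png" alt="">'
for kind, detail in scan(page):
    if kind == "missing_alt":
        print(build_alt_text_prompt(detail, "Report / Results"))
    else:
        print("Heading level skipped:", detail)
```

Separating rule-based detection from generation keeps the model's role narrow, which may make its suggestions easier to evaluate in the user studies.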

Team

Motivation

People with Parkinson’s disease experience several motor fluctuations during the day. These are normally associated with their response to medication: when the medication starts to wear off, symptoms become more visible. Detecting these changes is relevant both to assess the state of the disease and to allow clinicians to adapt medication plans.

What you will do

In this project, the student will design and develop an interactive voice response system that is able to receive and place scheduled calls to patients and lead a conversation to collect meaningful objective and subjective data for disease monitoring and support. Examples include administering a clinical scale over the phone and enabling a conversation with a conversational agent that briefs the person about their medication plan and answers their questions. Candidates for this project should have skills and interest in programming and web applications, and the motivation to work in a clinical context with frequent collaboration with clinicians and patients. The thesis will be supported by a scholarship from the IDEA-FAST project.

Team

Motivation

Entertainment as a whole has come to be recognized in modern society as a fundamental part of our lives and well-being. Gaming has a long list of potential benefits, including coping with anxiety, social bonding, and serving as a creative outlet. While there is a vast array of options for sighted players to play casually on their smartphones, blind players are for the most part restricted to audio games designed specifically for them. Furthermore, most developers know little about how to make their games accessible.

What you will do

In our group, we have been exploring how to make games more accessible. In this topic, you will have the opportunity to delve deeper into the design of accessible games. You will explore how to make individual games accessible, identifying patterns and limitations. You will conduct user studies early on to engage stakeholders, identify challenges, and ensure user engagement and representation.

Team

Motivation

Blind people want to play mainstream digital games, despite their overall inaccessibility. In our prior work, we have investigated the strategies employed by some blind gamers to overcome the barriers found in visual-centric mainstream games. One of the main barriers yet to be tackled - especially in 3D games - is dealing with the perspective of the world (or camera), for instance, misunderstanding the camera direction (e.g., aiming too high or too low). It is still unknown how the perspective of the world influences the ability to understand the environment.

What you will do

In this topic, you will explore the different ways in which the camera and avatar are controlled in games (e.g., first- or third-person perspectives) and how they influence the ability to effectively understand and navigate an environment. The work will include developing environments and games in Unity, possibly using VR headsets if the work is extended to a VR context, and involving blind people in user studies.

Team

Motivation

Most approaches to monitoring diseases are prescribed and led by clinicians, leaving patients with limited agency. However, recent advances in mobile and wearable devices allow people to collect years of objective information about their health and fitness. How can this information be useful for the future of digital health and for one’s own healthcare?

What you will do

In this work, the student will design and develop applications that allow people with Parkinson’s disease to gather and visualize meaningful, personalized information about their status. The work will also explore how this data can be made available and useful to clinicians, at the patient’s discretion and with their explicit consent. Lastly, the work will explore self-experimentation scenarios, in which individuals can experiment with behavioural changes in their daily lives and scientifically assess their impact, following a stats-of-1 paradigm. Candidates for this project should have skills and interest in data analysis and mobile computing, and the motivation to work in a clinical context with frequent collaboration with clinicians and patients. The thesis will be supported by a scholarship from the IDEA-FAST project.
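
One simple way to analyse such a single-person self-experiment is an exact permutation test comparing days with and without the behavioural change. This is a minimal sketch under strong assumptions: days are treated as exchangeable (no carry-over effects or time trends, which real single-subject designs must handle), the function name is hypothetical, and the scores are toy values rather than project data.

```python
from itertools import combinations
from statistics import mean


def n_of_1_permutation_test(baseline, intervention):
    """Exact two-sided permutation test on the difference in mean daily scores.

    Assumes days are exchangeable (no carry-over or time trend). Enumerating all
    relabellings is only feasible for short phases (C(20, 10) = 184,756 splits).
    """
    observed = mean(intervention) - mean(baseline)
    pooled = list(baseline) + list(intervention)
    n_days, n_int = len(pooled), len(intervention)
    total = sum(pooled)
    extreme = splits = 0
    for chosen in combinations(range(n_days), n_int):
        int_sum = sum(pooled[i] for i in chosen)
        diff = int_sum / n_int - (total - int_sum) / (n_days - n_int)
        # Small tolerance so splits tying the observed one are counted despite rounding
        if abs(diff) >= abs(observed) - 1e-9:
            extreme += 1
        splits += 1
    return observed, extreme / splits


# Hypothetical self-reported scores (higher = better): 5 baseline and 5 intervention days
effect, p_value = n_of_1_permutation_test([3, 4, 3, 5, 4], [6, 7, 6, 8, 7])
print(f"mean difference = {effect:.2f}, p = {p_value:.4f}")
```

For longer phases, random sampling of relabellings would replace full enumeration; the choice of statistic and design would be part of the thesis work.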

Team

Motivation

Blind people want to play mainstream digital games, despite their overall inaccessibility. In our prior work, we have investigated the strategies employed by some blind gamers to overcome the barriers found in visual-centric mainstream games. One of the main barriers yet to be tackled - especially in 3D games - is dealing with the perspective of the world (or camera), for instance, misunderstanding the camera direction (e.g., aiming too high or too low). This poses greater difficulties in tasks that require precision, such as aiming at a specific target.

What you will do

In this topic, you will explore different solutions for controlling a camera and assisting in aiming tasks, keeping in mind not only accessibility but also engagement and a sense of fairness in the game (e.g., assisting a blind user too much in a competitive game may make the game unbalanced and unfair). The work will include developing environments and games in Unity, and involving blind people in user studies.

Team

Motivation

People with neurodegenerative diseases experience several motor fluctuations during the day. These are normally associated with the disease itself or with the response to medication: when the medication starts to wear off, symptoms become more visible. Detecting these changes is relevant both to assess the state of the disease and to allow clinicians to adapt medication plans.

What you will do

Our group has developed data collection tools that use mainstream mobile devices to capture privacy-aware digital metrics about a person’s typing mechanics and mobile phone usage (including location dynamics, social communication statistics, etc.). This project will focus on exploring the relationship between these typing and social dynamics and established disease scales. Candidates for this project should have skills and interest in data analysis, modeling, and visualization, and the motivation to work in a clinical context with frequent collaboration with clinicians. The thesis will be supported by a scholarship from the IDEA-FAST project.
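
A first step in relating a digital metric to an established disease scale could be a rank correlation, which does not assume a linear relationship. The sketch below computes Spearman's coefficient as the Pearson correlation of average ranks (ties share a rank). The metric (a participant's median inter-key interval) and all values are hypothetical, chosen only to show the computation; Python 3.12 and later also provide `statistics.correlation(x, y, method="ranked")`.

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


# Hypothetical per-participant values: median inter-key interval (ms) and a clinical score
typing_metric = [180, 210, 250, 240, 300]
scale_score = [12, 15, 20, 22, 30]
print(f"Spearman rho = {spearman(typing_metric, scale_score):.2f}")
```

A single coefficient is only a starting point; modelling repeated measurements per person would likely be needed for the actual analysis.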

Team

Motivation

Oral health plays a vital role in overall well-being, and preventive oral care is key to maintaining good dental hygiene. However, many people struggle to establish and maintain healthy oral care habits for various reasons, including lack of awareness, forgetfulness, or limited access to dental care resources. There is a significant opportunity to leverage technology and develop a mobile application that promotes oral health and empowers individuals to take better care of their teeth and gums. A mobile application dedicated to oral health promotion, Usmile, has great potential to transform oral care practices. The application can offer a range of features, including personalised oral care plans, reminders for brushing, flossing, and dental appointments, educational resources on proper dental hygiene techniques, and interactive tools such as timers and trackers. By providing users with accessible and engaging information, Usmile can help individuals develop and sustain healthy oral care habits. Usmile can also bridge the gap in dental health education and awareness, reaching individuals who may not have easy access to dental care resources. The application can provide information on common dental issues, tips for prevention, and guidance on seeking professional dental assistance when needed. Furthermore, Usmile can incorporate gamification elements and rewards to make oral care routines fun and motivating, encouraging users to maintain consistent habits. By developing Usmile, we aim to promote oral health, empower individuals to take control of their dental care, and improve overall oral hygiene practices. This mobile application has the potential to enhance oral health outcomes, reduce dental issues, and contribute to better overall health and well-being.
This work will be conducted in collaboration with multiple schools of the Universidade de Lisboa: Faculdade de Medicina Dentária, Faculdade de Psicologia and Faculdade de Belas-Artes.

What you will do

During the thesis, you will focus on the development of Usmile, a mobile application for oral health promotion. You will conduct a thorough analysis of existing oral health applications, identify key features and functionalities, and design a user-friendly interface. You will then develop the mobile application using appropriate technologies and frameworks, implementing features such as personalised oral care plans, reminders, educational resources, and interactive tools. You will run user tests and collect feedback to refine the application’s functionality and user experience. Finally, you will document the development process, challenges, and solutions in your master’s thesis, showcasing the contribution of Usmile to oral health promotion.

Team

Motivation

Virtual reality (VR) is slowly becoming mainstream, paving the way for a large number of applications in a variety of contexts, such as gaming and entertainment, education, and employee training. Despite the hype about the immersive experiences VR can offer, there are numerous accounts of its inaccessibility. In particular, VR applications rely heavily on visual feedback as an essential modality, neglecting people with visual impairments, who are either offered poor VR experiences or excluded altogether.

What you will do

Our recent work has focused on understanding how audio feedback can give blind people greater knowledge of a virtual environment, improving the accessibility of VR experiences. In this topic, you will explore how blind users can use acoustic tools (in this case, Balls) to improve their awareness of the environment. Examples include placing a sound-emitting Ball at a specific location to serve as a landmark, or throwing a Ball to find out whether there is open space, a wall, or an object ahead. The work will include developing environments in Unity, working with VR headsets, and involving blind people in user studies.

Team

STORIES

In his PhD, Diogo Marques studied the phenomenon of social insider attacks on personal mobile devices, using anonymity-preserving large-scale online studies. His work won awards at the most reputable usable security conference and attracted intense media attention, including a Daily Show sketch (from minute 3:05). During his PhD, he spent 4 months as an intern at IBM Research NY. He is now a user researcher at Google Munich.

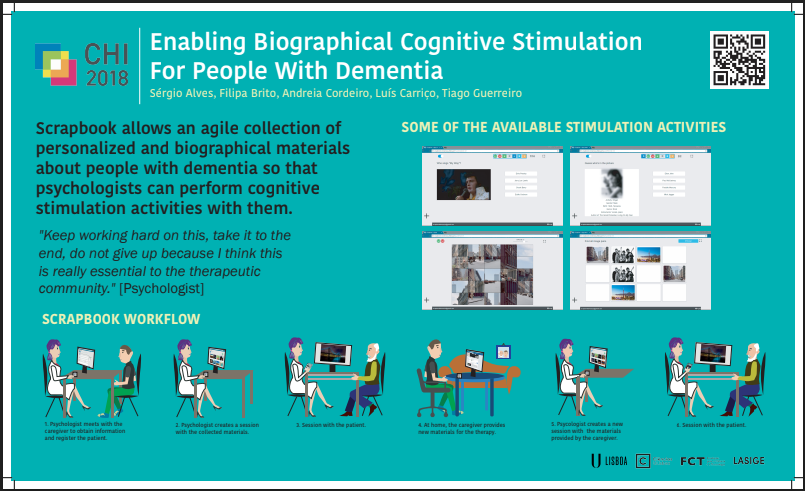

Sérgio Alves completed his master’s thesis in 2017, in which he developed Scrapbook, a web platform to enable agile biographical reminiscence therapy. Scrapbook was used for more than a year in national clinical institutions and care homes, and he presented it at the WebForAll conference in San Francisco. Before starting his PhD, he was hired as an engineer in the group to maintain and improve Scrapbook, and was then hired by Openlab (UK) to maintain the collaboration with Tech&People. He is now researching citizen-led experimentation with user interfaces (advised by Tiago Guerreiro and co-advised by Kyle Montague, Openlab, UK).

André Rodrigues completed his master’s thesis in 2014, working on system-wide assistive technologies. In this work, besides developing a novel two-handed tablet text-entry method for blind people, he was able to provide a mobile phone access solution to Miguel, a young blind man with tetraplegia. He presented his MSc work at BCS HCI in Southport, UK, and at CHI (the premier international conference in Human-Computer Interaction) in South Korea. He then pursued his PhD (winning LASIGE’s and DI’s Best PhD Student Award twice in a row), focusing on human-powered solutions for smartphone accessibility. Among his many contributions, we highlight TinyBlackBox, an Android mobile logger for user studies that enabled a longitudinal study with blind people and has been integrated into the popular AWARE framework. During his PhD, he spent 3 months at Newcastle University, where he collaborated in the development of an IVR health system that is now being explored in a variety of contexts. He is now a Postdoc scholar in the group, focusing on accessibility, gaming, and virtual reality.

Diogo Branco completed his master’s thesis in 2018, focusing on extracting metrics from wrist-worn accelerometers and designing usable free-living reports for neurologists and patients with Parkinson’s Disease. The platform has been in use ever since, for over 18 months and counting. He presented the outcomes of his MSc at CHI 2019 in Glasgow and started his PhD in 2019. His work enabled ongoing service contracts with pharmaceutical companies and the use of wearable sensors in clinical trials.

Hugo Simão is an industrial designer doing his master’s thesis on robots to support people with dementia. In this process, he designed and developed MATY, a multisensory robot that can project images, emit fragrances and sounds, and move around autonomously. He published his preliminary work at CHI 2019 in Glasgow, as well as other parallel projects on robots for older adults (e.g., a carrier-pigeon robot at HRI 2020). Hugo is starting his PhD in the group next semester and was accepted for a 6-month internship at Carnegie Mellon University (postponed due to the Coronavirus pandemic).

David Gonçalves is a master’s student in the group (since September 2019) working on asymmetric roles in mixed-ability gaming. So far, he has conducted formative studies with blind gamers (and their gaming partners) to understand their current gaming practices, and has developed his first mixed-ability game using Unity. He submitted his first full paper to an international conference (under review), and joined Tiago on a visit to Facebook Research London, where they were invited to present their work at Facebook’s first Research Seminar Series.

Ana Pires is a Postdoc scholar at Tech&People. She is a psychologist working on Human-Computer Interaction, with a particular focus on inclusive education. In her work, she explores how tangible interfaces can improve how children learn concepts such as mathematical or computational thinking. In the group, she has been working with Tiago and master’s students to develop solutions for accessible programming, among many other projects.

Frequently Asked Questions

I am considering doing a thesis in the group, but I am not sure I have the technical skills required. Am I required to know how to program wearables, develop games, or use computer vision or machine learning algorithms?

We are a human-computer interaction research group with a strong technical component. Besides working with end-users, we build robust user-facing interactive systems to be evaluated in realistic settings. However, the group already has a set of skills that enables a swift onboarding experience for newcomers. When needed, we provide internal workshops, pair master’s students with more experienced researchers, and offer constant support. The group also includes designers, engineers, and a psychologist, who support projects that go beyond the knowledge expected of a student.

What are the future prospects for a student doing a master’s with your group?

User research and user interface development are among the most sought-after areas of expertise in today’s job market. If you search for job offers, you will consistently find user research positions among the best paid. At the end of their master’s, our graduates’ only challenge has been choosing which offer they preferred. While some were eager to join the Portuguese market (e.g., Feedzai, Farfetch), we also have former master’s students staying with us as engineers on competitive contracts, several joining us for a PhD, and some going abroad (e.g., Google, Newcastle University).

I want a scholarship. Do you offer those?

The group is currently engaged in four European projects, two national projects, and several service contracts. Most of our students are supported by scholarships in those contexts. All students have the opportunity to apply for the LASIGE scholarships; at the end of those scholarships, depending on how the work is progressing, some students are offered a continuation of their financial support. Some projects are supported from the start if they are developed in the context of a funded project task. All of the students in the stories above were fully supported during their studies. In our current team, more than 50% of the students are supported by scholarships.

What’s the deal with conference travels and internships? Do I have to pay for those?

No. Presenting conference publications is fully supported by the group (travel, hotel, registration, per diem) when submission to the conference has been agreed with your advisor. Internships are normally arranged between your advisors and the host institution, and are also fully covered by funding schemes (e.g., Erasmus+) or by the host institution/company.

I want to work in the X project but I will need Y technology and a PC with special hardware. Do you have those?

If a proposal requires specific tech/materials, they will be provided, including computers/laptops to work with them (if needed). The group already has access to a wide set of resources such as eye trackers, mobile devices, physiological computing kits, IoT kits, commodity robots, Bluetooth beacons, and VR headsets, as well as cloud computing, panel, and transcription services.

How can I know more about the group?

You can check our publications, team, and research pages to get an idea of our published work and mission. We also invite you to check our lab memo to get a feel for how the group operates. Follow us on Twitter. And, for anything else or more detail, come talk to us.

What do you expect from your students?

The group welcomes motivated, strong-willed students who want to pursue impactful work. While getting a position is expected to be competitive with regard to a student’s background, we are mostly looking for people who are motivated to work and to make a difference. If you just want to finish your master’s in the easiest way possible, this is not the group you should be applying to. Conversely, if you are eager to learn a variety of skills, be challenged, be exposed to real-life problems, and seek fair and effective solutions to them, come work with us.